You will hear people talking about 'the cloud'. The cloud is a major component of modern computing, yet the term is vague and ephemeral, literally like the clouds. As a software engineer you need to know something about what the cloud is. Most of the software and services you work on will run there, and the way you write code and build apps needs to take that final environment into consideration. In fact, a widely used set of guidelines known as the Twelve-Factor App lists 12 factors to consider when building scalable services that will run in cloud environments. Our job is to understand why those 12 factors are important.

We are going to look at 4 topics that we think will give you a basic understanding of what the cloud is, and of how to think about and design the software that will run on it. We are going to look at:

The first thing to understand is virtualization. The idea is that on your computer you can create and use another computer that exists only in software. This software computer is called a virtual machine (VM).

The place to start getting familiar with this idea is to install and use VirtualBox. We are going to provision and create a virtual Raspberry Pi in VirtualBox.

`/dev/sda (ata-VBOX_HARDDISK...)`

This VM will behave like a distinct computer. For example, it has its own IP address on your network, and you can install and run additional software on it.

If we take the trick of VirtualBox and apply it in a more extreme way, we might wonder whether we need a base operating system at all. A 'bare metal' hypervisor is installed in place of an operating system such as Windows or Linux. The hypervisor is a thin layer of software that is installed on disk and presents the computer's hardware (CPU, memory, storage and network) to the virtual machines running on top of it. All you get when you turn the computer on and plug in a monitor is an IP address on the screen. You have to use another computer to connect to the running hypervisor, and then you can start to make virtual machines on the 'bare metal' using a configuration client (like VMware's vSphere client).

Before virtualization you usually had one server dedicated to one service, e.g. one server would run the company email. You would install something like Windows Server or a Linux distro and then install all the software for the email server. If you needed to update or reboot the server, the email service would go down for a period. With virtualization you can do much more with your hardware. You can run multiple operating systems and services on one piece of hardware, and turn off or reboot one virtual machine without affecting the others.

For example, you might own 1 physical server but run 4 virtual machines on that 1 piece of hardware.

Separating the operating system from the hardware is a fundamental building block of cloud computing. Why do you think that might be?

If we want to group a number of computers together and get them to work as a team, we need to start clustering them. In a cluster, computers are usually referred to as nodes. You can expect to find 2 different types of nodes in a cluster:

- manager nodes
- worker nodes

The manager nodes do the work of managing the cluster: labeling and keeping track of worker nodes and services. There might be a number of manager nodes, so one can be swapped out if need be and everything will keep running. The same is true of worker nodes. In a cluster you want to be able to power down any node without impacting the services, and then be able to provision and join a replacement node into the cluster if you want to.

If you can replace nodes, why can't you add them too? You can. This is called horizontal scaling. You start with a cluster of 3 nodes, and as demand for your services increases you match that demand by adding more nodes. For example, in the autumn/fall Walmart has a spike in website traffic around 'Black Friday', so they anticipate the extra demand by scaling horizontally, adding extra nodes to absorb the spike in traffic. After the holiday season Walmart doesn't need all those extra nodes as demand reduces, so to save the cost of keeping all that capacity available they can scale back and remove the extra nodes. You will often hear infrastructure set up like this described as 'elastic' or horizontally scalable.
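To make the idea concrete, here is a minimal Python sketch of the arithmetic behind scaling out. It assumes, purely for illustration, that every node can handle a fixed number of requests per second (`capacity_per_node`) and that the cluster never shrinks below 3 nodes; both numbers, the traffic figures and the function name `nodes_needed` are hypothetical, not anything Walmart or Docker publishes.

```python
import math


def nodes_needed(peak_rps: float, capacity_per_node: float, min_nodes: int = 3) -> int:
    """Return how many identical nodes are needed to absorb peak_rps.

    Assumes every node handles capacity_per_node requests per second and
    that the cluster never shrinks below min_nodes (for redundancy).
    """
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    # Round up: a fraction of a node's worth of traffic still needs a whole node.
    return max(min_nodes, math.ceil(peak_rps / capacity_per_node))


# Hypothetical traffic for a normal week and for the 'Black Friday' spike.
normal = nodes_needed(peak_rps=2_500, capacity_per_node=1_000)
spike = nodes_needed(peak_rps=9_500, capacity_per_node=1_000)
print(f"normal: {normal} nodes, spike: {spike} nodes")  # normal: 3 nodes, spike: 10 nodes
```

Under these assumptions, scaling out means adding 7 nodes before the spike, and scaling back means removing them afterwards.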

We did something like this when we added agents to our GoCD server. We added agents (nodes) to combine the computing power of all our devices.

Clustered nodes can be virtual machines or physical computers connected together. They are usually geographically co-located, but they don't have to be. One of the main benefits a cluster provides is high availability, and load balancing is a key part of achieving it.

The entry point into the cluster's network (a load balancer) keeps track of how busy each node in the cluster is, and traffic is directed to the nodes with the most spare capacity. That means if a node starts to get really busy, the network routing will direct traffic to other nodes, giving the busy node time to complete its work. This way the demand is spread evenly across all the nodes. Can you see how Walmart can now cope with extra traffic by adding extra nodes?
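The routing rule described above can be sketched as a 'least connections' load balancer in Python. This is a simplified model for illustration only, not a description of how Docker Swarm's routing mesh works internally; the `Node` class and the `route` and `finish` functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    active_requests: int = 0  # how busy the node currently is


def route(nodes: list[Node]) -> Node:
    """Send the next request to the node with the fewest active requests.

    Ties go to the node listed first.
    """
    target = min(nodes, key=lambda n: n.active_requests)
    target.active_requests += 1
    return target


def finish(node: Node) -> None:
    """Mark one of this node's requests as complete, freeing capacity."""
    node.active_requests -= 1


cluster = [Node("node01"), Node("node02"), Node("node03")]
cluster[0].active_requests = 5  # node01 is already busy

picks = [route(cluster).name for _ in range(4)]
print(picks)  # ['node02', 'node03', 'node02', 'node03']: node01 gets time to catch up
```

Adding a node to `cluster` immediately gives `route` somewhere new to send traffic, which is the same effect Walmart gets by scaling out.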

The other benefit of a cluster is redundancy. By running more than one instance (replica) of each service, if one instance fails or crashes there is another running instance that can keep the service going. When you need to update a service, you can roll the update out through the cluster one node at a time, so the service never goes down, even while you are upgrading! You can even physically migrate the service from one piece of hardware to another, one node at a time, without your service ever going down.
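A rolling update can be modelled in a few lines of Python. The sketch below assumes one replica of the service per node, takes each node down and upgrades it in turn, and records how many replicas are still serving at every step. The function name and version strings are hypothetical; in a real swarm, `docker service update` performs the rollout for you.

```python
from typing import Optional


def rolling_update(replicas: dict[str, Optional[str]], new_version: str) -> list[int]:
    """Upgrade a service one node at a time.

    replicas maps node name -> running version (None while that node is down).
    Returns how many replicas were still serving at each step of the rollout.
    """
    serving_counts = []
    for node in list(replicas):
        replicas[node] = None  # stop the old replica on this node
        serving_counts.append(sum(v is not None for v in replicas.values()))
        replicas[node] = new_version  # start the upgraded replica
    return serving_counts


service = {"node01": "v1", "node02": "v1", "node03": "v1"}
counts = rolling_update(service, "v2")
print(counts)   # [2, 2, 2]: two replicas keep serving throughout
print(service)  # {'node01': 'v2', 'node02': 'v2', 'node03': 'v2'}
```

With a single replica the count would drop to 0 during its upgrade, which is why redundancy and rolling updates go together.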

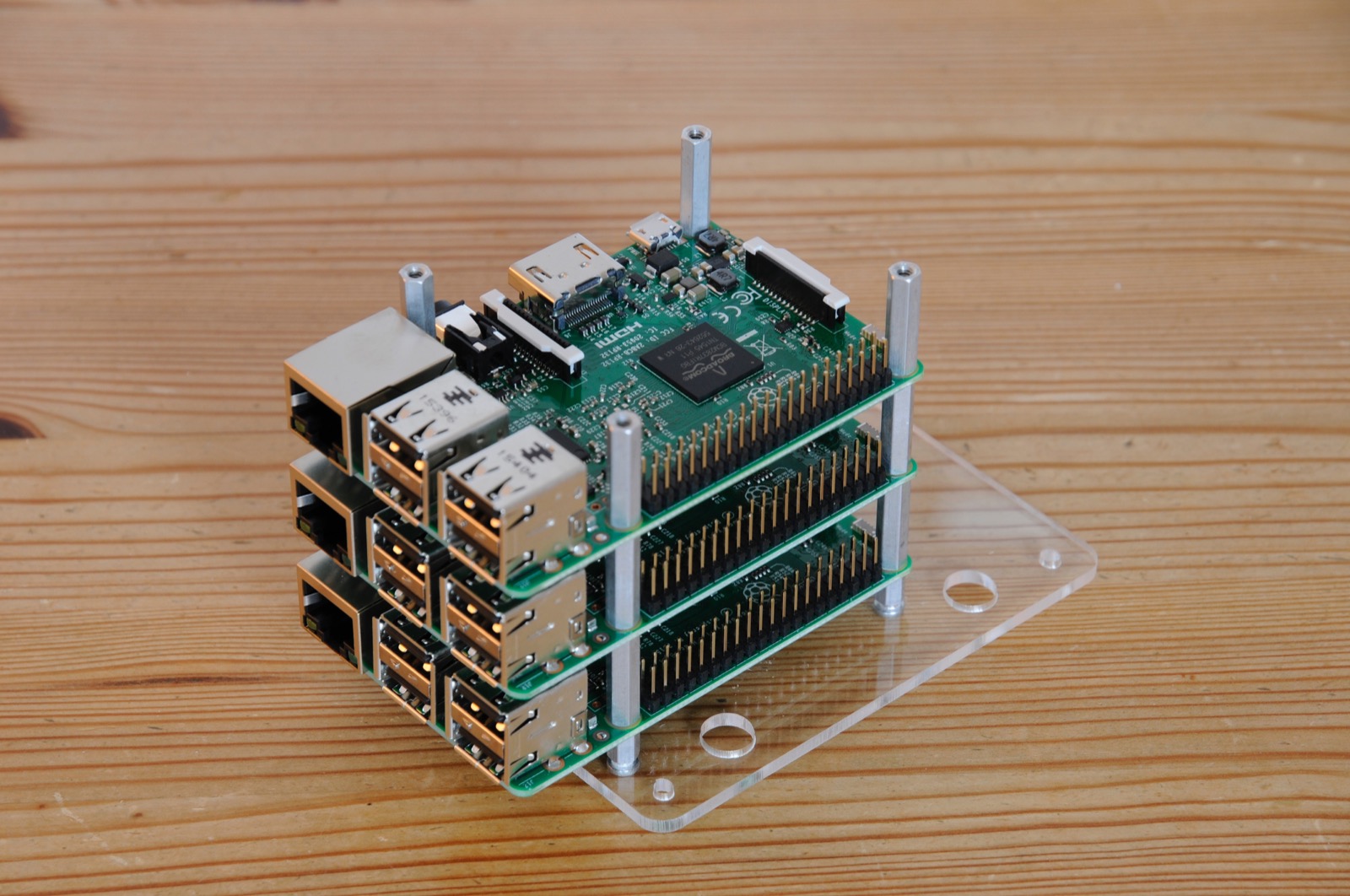

Below are 4 options for setting up your own cluster.

There are different ways you can create a cluster for yourself. You can pay for VMs on AWS, DigitalOcean, Azure or Scaleway (Scaleway for ARM-based servers). Whatever service you use, you'll need to do the following:

- find each VM's IP address (for example with `ifconfig`)
- set each node's name in `/etc/hosts` and `/etc/hostname`, e.g. `192.168.56.101 manager01`

To build a local cluster of VMs with Vagrant and VirtualBox instead:

- install the host manager plugin with `vagrant plugin install vagrant-hostmanager`
- create a `Vagrantfile` and add the following:

```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

$install_docker_script = <<SCRIPT
echo "Installing dependencies ..."
sudo apt-get update
echo Installing Docker...
curl -sSL https://get.docker.com/ | sh
sudo usermod -aG docker vagrant
SCRIPT

BOX_NAME = "ubuntu/xenial64"
MEMORY = "512"
MANAGERS = 2
MANAGER_IP = "172.20.20.1"   # manager N gets 172.20.20.1N
WORKERS = 2
WORKER_IP = "172.20.20.10"   # worker N gets 172.20.20.10N
CPUS = 2
VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|
  # Common setup for every node
  config.vm.box = BOX_NAME
  config.vm.synced_folder ".", "/vagrant"
  config.vm.provision "shell", inline: $install_docker_script, privileged: true

  config.vm.provider "virtualbox" do |vb|
    vb.memory = MEMORY
    vb.cpus = CPUS
  end

  # vagrant-hostmanager keeps /etc/hosts up to date on every node
  config.hostmanager.enabled = true
  config.hostmanager.manage_guest = true
  config.hostmanager.ignore_private_ip = false
  config.hostmanager.include_offline = true

  # Set up manager nodes
  (1..MANAGERS).each do |i|
    config.vm.define "manager0#{i}" do |manager|
      manager.vm.network :private_network, ip: "#{MANAGER_IP}#{i}"
      manager.vm.hostname = "manager0#{i}"

      if i == 1
        # Only forward ports to the host for manager01. Use manager.vm here:
        # config.vm would apply the forwards to every node and collide on the host.
        manager.vm.network :forwarded_port, guest: 80, host: 80
        manager.vm.network :forwarded_port, guest: 5432, host: 5432
        manager.vm.network :forwarded_port, guest: 2368, host: 2368
        manager.vm.network :forwarded_port, guest: 8080, host: 8080
        manager.vm.network :forwarded_port, guest: 5000, host: 5000
        manager.vm.network :forwarded_port, guest: 9000, host: 9000
        manager.hostmanager.aliases = %w(172.20.20.11 manager01)
      else
        manager.hostmanager.aliases = %w(172.20.20.12 manager02)
      end
    end
  end

  # Set up worker nodes
  (1..WORKERS).each do |i|
    config.vm.define "worker0#{i}" do |worker|
      worker.vm.network :private_network, ip: "#{WORKER_IP}#{i}"
      worker.vm.hostname = "worker0#{i}"

      if i == 1
        worker.hostmanager.aliases = %w(172.20.20.101 worker01)
      else
        worker.hostmanager.aliases = %w(172.20.20.102 worker02)
      end
    end
  end
end
```

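- `vagrant up` will provision and start your cluster
- `vagrant ssh NODE_NAME` connects to a node, e.g. `vagrant ssh manager01`
- `vagrant ssh manager01` and initialise the swarm: `docker swarm init --listen-addr 172.20.20.11:2377 --advertise-addr 172.20.20.11:2377`
- `vagrant ssh worker01` (and then worker02) and join the workers to the swarm with the token the above command created
- to add manager02 as a second manager, generate a manager join token on manager01 with `docker swarm join-token manager`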

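For a cluster of Raspberry Pis:

- enable SSH (`touch ssh` in the `/boot` folder on the SD card)
- give each Pi its own name, e.g. `pinode01` (`sudo nano /etc/hosts` & `sudo nano /etc/hostname`)
- install Docker with `curl -sSL https://get.docker.com | sh`
- `sudo usermod -aG docker pi` (log out and back in so the group change takes effect)
- check the install with `docker version`

Once you have your set of nodes ready, select a 'manager' node and run the following Docker command to initialise that node as the manager.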

```shell
sudo docker swarm init --advertise-addr NODE1_IP_ADDRESS
```

Replace NODE1_IP_ADDRESS with the IP address of your manager node. The output includes a command to run on your worker nodes (see below); copy and paste it onto each worker so it joins the 'swarm' (Docker's name for a cluster). Your join command will look something like the one below.

```shell
docker swarm join --token SWMTKN-1-0b8xy6w_____SOME_TOKEN_HASH_____eyuy26luxxj9fdh9vz x.x.x.x:2377
```

If you want to join an additional manager node to your cluster, run this command on your primary manager node to generate a manager join token.

```shell
docker swarm join-token manager
```

You've just created a cluster! To begin to appreciate what you have just done, we are going to download and install a tool to help us monitor and manage our cluster. There is an open source project called Portainer that can help us with this. On the manager node, run the following command to download the 'stack' file.

```shell
curl -L https://downloads.portainer.io/portainer-agent-stack.yml -o portainer-agent-stack.yml
```

If you cat portainer-agent-stack.yml, you will see that it is a Docker Compose file. However, there are some extensions that make it a 'stack' file. Can you see the section labeled deploy:? Because we are going to run this Docker Compose file in a swarm (cluster), it has some extra config, such as how many replicas of each service to run and the particular type of node to run them on.
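To see what `replicas` and a placement constraint such as `node.role==manager` ask the scheduler to do, here is a toy scheduler in Python. It is a hypothetical simplification, not Swarm's real scheduling algorithm: it filters the nodes by role, then hands out replicas round-robin so they are spread across the eligible nodes. The node names follow the Vagrant cluster above.

```python
from itertools import cycle
from typing import Optional

# Node name -> role, as in a 2-manager, 2-worker cluster.
NODES = {
    "manager01": "manager",
    "manager02": "manager",
    "worker01": "worker",
    "worker02": "worker",
}


def place(replicas: int, role: Optional[str] = None) -> list[str]:
    """Return the node chosen for each replica.

    role mimics a 'node.role==<role>' constraint; None means any node.
    """
    eligible = [name for name, r in NODES.items() if role is None or r == role]
    if not eligible:
        raise ValueError(f"no nodes satisfy node.role=={role}")
    nodes = cycle(eligible)  # spread replicas across eligible nodes in turn
    return [next(nodes) for _ in range(replicas)]


print(place(3))                  # replicas: 3, no constraint
print(place(1, role="manager"))  # replicas: 1, constrained to manager nodes
```

A constraint only narrows the list of eligible nodes; the replica count decides how many tasks are spread over them.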

When you are ready, deploy this stack into your cluster with the following command.

```shell
docker stack deploy -c portainer-agent-stack.yml portainer
```

If you are using https://labs.play-with-docker.com/, you'll see some port links appear at the top of your screen. Click on the 9443 port. You might need to add https:// to the beginning of the URL to get it to load. Play with Docker is doing some fancy things with SSH tunnels, so SSL is not going to work properly; ignore the browser warnings. You're fine: you know you are doing something a little funky.

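If you have a cluster of VMs, visit https://NODE1_IP_ADDRESS:9443, where NODE1_IP_ADDRESS is the IP address of your manager node (if your version of the stack file only publishes port 9000, use http://NODE1_IP_ADDRESS:9000 instead).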

Now that we have a cluster, we can start to use it to run our apps and services. Docker Swarm lets us define our services in Docker Compose files; when they are deployed to a swarm, those files are called 'stacks'. We want our Ghost blog to run on a number of nodes, with those nodes communicating over the same virtual network, so we are going to start by creating an overlay network for our Ghost blog service to run on.

```shell
docker network create --driver overlay ghost_network
```

Create a ghost.yml file on your primary manager node. Add the following 'stack' into the file and save it.

```yaml
version: '3.7'

services:
  blog:
    image: ghost:4-alpine
    # docker stack deploy ignores depends_on, so the blog may start before
    # the database is ready; Swarm restarts failed tasks by default.
    depends_on:
      - db
    ports:
      - "2368:2368"
    environment:
      database__client: mysql
      database__connection__host: db
      database__connection__user: ghostusr
      database__connection__password: ghostme
      database__connection__database: ghostdata
    deploy:
      replicas: 1
    networks:
      - ghost_network

  db:
    image: mysql:5.7
    ports:
      - "3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: example
      MYSQL_USER: ghostusr
      MYSQL_PASSWORD: ghostme
      MYSQL_DATABASE: ghostdata
    deploy:
      replicas: 1
      placement:
        constraints:
          - "node.role==manager"
    networks:
      - ghost_network

networks:
  ghost_network:
    external: true
```

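Deploy the stack with `docker stack deploy -c ghost.yml ghost` (ghost is the stack name; you can choose any name). You should see the database spin up OK. If the Ghost blog does not start properly, you will have to provision the database. You can use Portainer to help with this: find the database container, open a shell in the container and log in to the database with `mysql -u root -p`, entering the MYSQL_ROOT_PASSWORD value from the stack file (example) when prompted.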

If you need to create the ghostusr user and the database, run the following sequence of SQL commands and then exit the container.

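```sql
-- IF EXISTS / IF NOT EXISTS let these run even if the image already created them
DROP USER IF EXISTS 'ghostusr'@'%';
FLUSH PRIVILEGES;
CREATE USER 'ghostusr'@'%' IDENTIFIED BY 'ghostme';
CREATE DATABASE IF NOT EXISTS ghostdata;
GRANT ALL PRIVILEGES ON ghostdata.* TO 'ghostusr'@'%';
FLUSH PRIVILEGES;
```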

Once this is set up on the database, the Ghost blog container should start up OK without you doing anything else. Visit localhost:2368 or NODE_IP_ADDRESS:2368 and have a click around. Now imagine our blog site gets really popular, and we want to scale our site horizontally. Instead of having just 1 instance of our blog running, we can increase it to 3, and Swarm will spread those instances across the nodes in our cluster (one per node on a three-node cluster).

Update the ghost.yml file so that the deploy sections of the blog and db services look like this:

```yaml
services:
  blog:
    # other stuff here
    deploy:
      replicas: 3
  db:
    # other stuff here
    deploy:
      replicas: 2
```

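Try it: redeploy the stack with `docker stack deploy -c ghost.yml ghost` (using the stack name you chose), and Swarm will update the running services to match the file.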

If only it were that simple. Visit localhost:2368/about/edit and you will be prompted to create an account or create a post. Those are written to the database, and MySQL uses the file system to store its data. If we scale the database over 2 nodes, what will happen to our data?

2 nodes means 2 discrete file systems, so there is no way the data will stay in sync. We might create a post and save it in the database on one node, but when we go to update it and our request lands on the other node, that node won't find our post in its database. The site will break, and it will be buggy and glitchy.
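The problem can be reproduced with a small Python simulation. Each hypothetical `Replica` below keeps its own private dictionary, standing in for a MySQL instance writing to its own node's disk, and requests alternate between the two replicas. Nothing here is Ghost or MySQL code; it only models two unsynchronised copies of the data.

```python
from itertools import cycle
from typing import Optional


class Replica:
    """A database replica that stores data only on its own node's disk."""

    def __init__(self, node: str) -> None:
        self.node = node
        self.posts: dict[int, str] = {}  # private storage, never shared

    def save(self, post_id: int, body: str) -> None:
        self.posts[post_id] = body

    def load(self, post_id: int) -> Optional[str]:
        return self.posts.get(post_id)


db1, db2 = Replica("node01"), Replica("node02")
router = cycle([db1, db2])  # requests alternate between the two nodes

next(router).save(1, "Hello, world")  # request 1: create the post on node01
found = next(router).load(1)          # request 2: edit it, but we land on node02
print(found)  # None: node02 has never seen the post
```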

Now we have created our own cloud environment, and we can deploy and scale our applications across multiple nodes! But you now know you can't just replicate multiple instances of a service across nodes and have it just work. Any app that reads from or writes to local disk might not scale well. For example, WordPress writes your configuration to the file system as well as to a database. You've seen the glitchy behavior in the Ghost blog example. This happens because each instance's file system is on a separate computer. I might write some config to the file system on one computer, but my next request for another page gets routed to a different computer in the cluster, and I've lost my state.

For our cluster to work, and for us to be able to scale apps, we also need to abstract the file system we are using and have it replicated on each node. For this we'll use GlusterFS. On each node we are going to create the same folder, e.g. /home/vagrant/vols. Next we are going to install GlusterFS and create a 'volume' that represents this folder as an abstraction. We can treat the 'volume' as if it were a single folder on a single computer, which means we can simply include it in our Docker stack file as a mounted volume. The containers think they are writing to local disk, while Gluster intercepts the reads and writes and keeps the same files and folders in sync on all the nodes in the cluster.
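In contrast with separate disks, a replicated volume applies every write to every copy. The Python sketch below is a hypothetical model of that idea only; GlusterFS does this at the file-system level and also handles failures and healing, which the sketch ignores. Each 'brick' is one node's folder, as in a `replica 4` volume across the four nodes.

```python
from typing import Optional


class ReplicatedVolume:
    """Toy model of a 'replica N' volume: every write is copied to every brick."""

    def __init__(self, bricks: list[str]) -> None:
        # One dict per brick stands in for that node's folder, e.g. ~/vols.
        self.bricks: dict[str, dict[str, str]] = {b: {} for b in bricks}

    def write(self, path: str, data: str) -> None:
        for files in self.bricks.values():
            files[path] = data  # copy the write to every brick

    def read(self, brick: str, path: str) -> Optional[str]:
        return self.bricks[brick].get(path)


vols = ReplicatedVolume(["manager01", "manager02", "worker01", "worker02"])
vols.write("content/hello.py", "print('hi')")      # written through one node
print(vols.read("worker02", "content/hello.py"))  # read back on another node
```

Because every brick holds the same files, it no longer matters which node a request lands on.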

On every node:

- `sudo add-apt-repository ppa:gluster/glusterfs-3.10 && sudo apt-get update` adds the GlusterFS repository to apt
- `sudo apt-get install glusterfs-server -y` installs GlusterFS
- `sudo systemctl start glusterfs-server && sudo systemctl enable glusterfs-server` starts the service and configures it to run when the machine boots
- `mkdir -p ~/vols/content && mkdir ~/vols/mysql` creates the folders

Then, on one node only (e.g. manager01):

- `sudo gluster peer probe worker01` adds worker01 to the trusted storage pool; repeat for all the other nodes
- `sudo gluster volume create vols replica 4 manager01:/home/vagrant/vols manager02:/home/vagrant/vols worker01:/home/vagrant/vols worker02:/home/vagrant/vols force` creates a volume with a copy on all 4 nodes
- `sudo gluster volume start vols` starts the volume

Finally, on every node:

- `sudo mount.glusterfs localhost:/vols /mnt` mounts the volume
- add `localhost:/vols /mnt glusterfs defaults,_netdev,backupvolfile-server=localhost 0 0` to `/etc/fstab` so the mount is restored on boot

Want to see what you just did? SSH into a node and create a file at /mnt/content/hello.py, then go and look in the same spot on another node. Now we have an area of the file system that is synced across all our nodes. Let's put the database there. Update your ghost.yml to include volumes: as below.

```yaml
services:
  blog:
    # other stuff here
    volumes:
      - "/mnt/content:/var/lib/ghost/content"
  db:
    # other stuff here
    volumes:
      - "/mnt/mysql:/var/lib/mysql"
```

Problem: MySQL wants to write logs. From the file system's point of view, it looks like 2 different MySQL processes are trying to write to the same file 🤦♀️. When process 1 writes to the log file, it 'locks' the file. When process 2 tries to write to the log file, it can't; it is locked out 🤬. For now, scale the database back to 1 replica. If you are interested in scaling your MySQL instances, you need to read about replication in the MySQL docs. We need to think about logging too!
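The locking behaviour can be demonstrated on a single Linux or macOS machine with Python's standard `fcntl` module. This is a sketch of exclusive advisory file locking in general, not of MySQL's own locking code: two independent opens of the same file stand in for the two MySQL processes, and the second non-blocking lock attempt is refused. The file name `shared.log` is hypothetical.

```python
import fcntl
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "shared.log")

# 'Process 1' opens the log and takes an exclusive lock on it.
first = open(path, "a")
fcntl.flock(first, fcntl.LOCK_EX | fcntl.LOCK_NB)

# 'Process 2' opens the same file separately and tries to lock it too.
second = open(path, "a")
try:
    fcntl.flock(second, fcntl.LOCK_EX | fcntl.LOCK_NB)
    second_locked = True
except BlockingIOError:
    second_locked = False  # locked out: process 1 still holds the lock

print("second writer got the lock:", second_locked)  # False

fcntl.flock(first, fcntl.LOCK_UN)  # process 1 releases the lock...
fcntl.flock(second, fcntl.LOCK_EX | fcntl.LOCK_NB)  # ...and now process 2 can take it
first.close()
second.close()
```

On a GlusterFS mount the two opens would come from containers on different nodes, but the outcome is the same: only one writer holds the lock at a time.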

Below is a final ghost.yml file that you should be able to deploy and use in your swarm.

```yaml
version: '3.7'

services:
  blog:
    image: ghost:4-alpine
    depends_on:
      - db
    ports:
      - "2368:2368"
    environment:
      database__client: mysql
      database__connection__host: db
      database__connection__user: ghostusr
      database__connection__password: ghostme
      database__connection__database: ghostdata
    deploy:
      replicas: 3
    networks:
      - ghost_network
    volumes:
      - "/mnt/content:/var/lib/ghost/content"

  db:
    image: mysql:5.7
    ports:
      - "3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: example
      MYSQL_USER: ghostusr
      MYSQL_PASSWORD: ghostme
      MYSQL_DATABASE: ghostdata
    deploy:
      replicas: 1
      placement:
        constraints:
          - "node.role==manager"
    networks:
      - ghost_network
    volumes:
      - "/mnt/mysql:/var/lib/mysql"

networks:
  ghost_network:
    external: true
```

The point of introducing you to cloud computing like this is so that you get to see some of the powers and problems of cloud computing. Developers targeting deployment in clustered environments like this have developed a set of guidelines to make the elastic scaling of apps easier to manage. We think you should know about these 12 factors, because they will influence your design choices and the way you structure your features and solutions in your final workplace project.

What do these 12 factors mean to you? We'd like you to contrast the 12 factors with what you do in your workplace. You can make a video, a blog post or a presentation that describes the 12 factors and how your teams follow or don't follow them.